The scope of data science solutions keeps growing rapidly. That is not surprising if you think of them as tools designed to meet specific business needs and optimize particular business processes. Data science helps companies make better decisions, and recommender systems are one of the tools that help data scientists deliver on that goal.

Recommender systems are tools designed for interacting with large and complex information spaces and prioritizing items in these spaces that are likely to be of interest to the user. This area of expertise, christened in 1995, has grown enormously in the variety of problems addressed and techniques employed as well as in its practical applications. Personalized recommendations are an important part of many on-line e-commerce applications like Amazon.com, Netflix, and Pandora. The wealth of practical application experience has become an inspiration for researchers to extend the reach of recommender systems into new and challenging areas.

Recommender systems research has incorporated a wide variety of artificial intelligence techniques, including machine learning, data mining, user modeling, case-based reasoning, and constraint satisfaction, to name a few. This article takes stock of the current landscape of recommender systems research and identifies the directions the field is now taking.

What Recommender Systems Are and What They Do

Abstractly speaking, recommender systems (RSs) are techniques that suggest items of any type of content that are likely to be of use to a user, supporting the user’s decision-making process. In their simplest form, personalized recommendations are offered as ranked lists of items. To produce this ranking, RSs try to predict the most suitable products or services based on the user’s preferences and constraints.

In brief, every RS relies on three informational components: information about users, information about items, and information about transactions (purchases, ratings, or any similar interaction between a user and an item). To implement its core function, identifying items that are useful to the user, an RS must predict that an item is worth recommending. To do this, the system must be able to predict the utility of some items, or at least compare the utility of several items and decide which ones to recommend based on that comparison.

RSs can serve different purposes:

- Assistance in decision making

- Assistance in comparison

- Assistance in discovery

- Assistance in exploration

RSs can also be used to calculate several behavioral aspects of the following user-item segments:

- Non-loyal users and popular items

- Non-loyal users and unpopular items

- Loyal users and popular items

- Loyal users and unpopular items

RSs are among the most effective business tools, aimed directly at increasing revenue and profitability and at optimizing the current product portfolio. The following industries have shown rapid, demand-led growth in RS adoption:

- Retail business: market basket analysis, sequential patterns mining, user profiling, goods portfolio optimization

- Hotel business and tourism: tour and hotel recommendations based on ratings and user preferences

- Digital content: recommendation of new items based on purchase or visit history

- Movie databases: recommendations based on user ratings

Let me demonstrate the basics of the core recommendation computation: predicting the utility of an item for a user. Let’s model the degree of utility of item $A$ for user $U$ as a function $R(U, A)$, as is normally done by considering user ratings for items. The fundamental task of a collaborative filtering RS is to predict the value of $R$ over pairs of users and items, i.e., to compute $\hat{R}(U, A)$, where $\hat{R}$ is the estimate of the true function $R$ calculated by the RS. After computing this prediction for the active user $U$ on a set of items, i.e., $\hat{R}(U, A_1), \ldots, \hat{R}(U, A_N)$, the system recommends the items $A_{j_1}, \ldots, A_{j_K}$ ($K \le N$) with the largest predicted utility. $K$ is typically a small number, much smaller than the size of the item set or the number of items for which a prediction can be computed; in other words, RSs “filter” the items that are recommended to users.
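To make the predict-then-filter step concrete, here is a minimal Python sketch. It assumes a small hypothetical rating matrix and, purely as a placeholder estimator, predicts the utility of an item as its mean rating among users who rated it (a real RS would substitute a personalized model). The part that mirrors the text is the last step: rank the user's unrated items by predicted utility and keep only the top K.

```python
from typing import Dict, List, Tuple

# Hypothetical toy data: ratings[user][item] is the observed utility R(U, A) on a 1-5 scale.
ratings: Dict[str, Dict[str, float]] = {
    "alice": {"A1": 5, "A2": 3},
    "bob": {"A1": 4, "A3": 2, "A4": 5},
    "carol": {"A2": 4, "A3": 1, "A4": 4, "A5": 3},
}


def predict(user: str, item: str) -> float:
    """Placeholder estimate of R(user, item): the item's mean rating over users who rated it.

    This deliberately ignores `user`; a real RS would plug in a personalized model here.
    """
    scores = [r[item] for r in ratings.values() if item in r]
    return sum(scores) / len(scores) if scores else 0.0


def recommend(user: str, k: int) -> List[Tuple[str, float]]:
    """Return the K unrated items with the largest predicted utility for `user`."""
    all_items = {item for r in ratings.values() for item in r}
    candidates = all_items - set(ratings[user])
    predictions = [(item, predict(user, item)) for item in candidates]
    # Highest predicted utility first; ties broken by item id so the output is deterministic.
    predictions.sort(key=lambda p: (-p[1], p[0]))
    return predictions[:k]


print(recommend("alice", k=2))  # [('A4', 4.5), ('A5', 3.0)]
```

Swapping in a better `predict` function changes the ranking but not the filtering logic, which is why the two are kept separate.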

Types of Recommender Systems

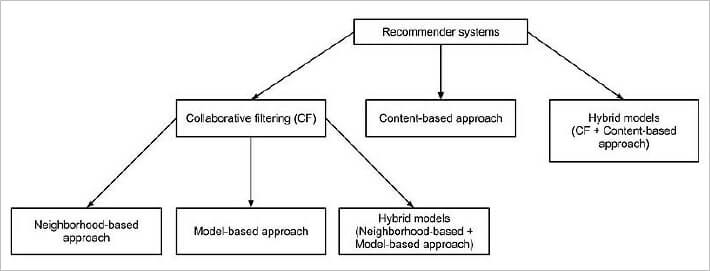

Typically, recommender systems are classified according to the technique used to create a recommendation:

Content-based systems examine item properties to recommend items that are similar in content to the items the user has previously liked, or that match the user’s attributes.

For instance, music recommendations can be based on tracks by the same bands (singers), released on the same label, belonging to the same genres, etc.

For textual content (news, blogs, etc.), the system recommends other sites, blogs, or news articles with similar content.
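A minimal content-based sketch, assuming each item is described by a set of features (artist, label, genre) and that the user profile is the sum of the feature vectors of the items the user liked. Candidates are ranked by cosine similarity to that profile. The catalog and feature names are hypothetical; real systems often use richer representations, such as TF-IDF vectors for textual content.

```python
import math
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical catalog: each track is described only by its content features.
catalog: Dict[str, Set[str]] = {
    "track1": {"artist:Band X", "label:L1", "genre:rock"},
    "track2": {"artist:Band X", "label:L1", "genre:indie"},
    "track3": {"artist:Singer Y", "label:L2", "genre:jazz"},
    "track4": {"artist:Band Z", "label:L3", "genre:rock"},
}


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse feature-count vectors."""
    dot = sum(a[f] * b[f] for f in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(liked: List[str], k: int) -> List[Tuple[str, float]]:
    # The user profile is the sum of the feature vectors of the items the user liked.
    profile = Counter(f for item in liked for f in catalog[item])
    scored = [
        (item, cosine(profile, Counter(features)))
        for item, features in catalog.items()
        if item not in liked
    ]
    scored.sort(key=lambda p: (-p[1], p[0]))
    return scored[:k]


print([item for item, _ in recommend(["track1"], k=3)])  # ['track2', 'track4', 'track3']
```

`track2` ranks first because it shares both the band and the label with the liked track, while `track4` shares only the genre.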

In collaborative filtering (CF), a user is recommended items based on the past ratings of all users collectively. CF comes in two types: user-based and item-based.

User-based CF works as follows: take a user U, find a set D of other users whose ratings are similar to the ratings of U, and use the ratings of those like-minded users to calculate a prediction for U.
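A minimal sketch of user-based CF. It assumes Pearson correlation over co-rated items as the similarity measure, takes D to be the users with positive correlation who rated the target item, and predicts with a mean-centered weighted average. These are common choices but not the only ones, and the ratings are hypothetical.

```python
import math
from typing import Dict, Optional

# Hypothetical toy ratings (1-5 scale); user "U" has not rated item "D".
ratings: Dict[str, Dict[str, float]] = {
    "U": {"A": 5, "B": 3, "C": 4},
    "V": {"A": 4, "B": 2, "C": 4, "D": 4},
    "W": {"A": 1, "B": 5, "C": 2, "D": 1},
}


def mean(user: str) -> float:
    r = ratings[user]
    return sum(r.values()) / len(r)


def pearson(u: str, v: str) -> float:
    """Pearson correlation between two users over the items both have rated."""
    common = ratings[u].keys() & ratings[v].keys()
    if len(common) < 2:
        return 0.0
    mu = sum(ratings[u][i] for i in common) / len(common)
    mv = sum(ratings[v][i] for i in common) / len(common)
    num = sum((ratings[u][i] - mu) * (ratings[v][i] - mv) for i in common)
    den = math.sqrt(sum((ratings[u][i] - mu) ** 2 for i in common)) * math.sqrt(
        sum((ratings[v][i] - mv) ** 2 for i in common)
    )
    return num / den if den else 0.0


def predict(u: str, item: str) -> Optional[float]:
    """Predict u's rating for item from like-minded users D who rated it."""
    sims = {v: pearson(u, v) for v in ratings if v != u and item in ratings[v]}
    neighbors = {v: s for v, s in sims.items() if s > 0}  # the set D
    if not neighbors:
        return None
    # Mean-centered weighted average: each neighbor contributes its deviation from its own mean.
    num = sum(s * (ratings[v][item] - mean(v)) for v, s in neighbors.items())
    den = sum(neighbors.values())
    return mean(u) + num / den


print(predict("U", "D"))  # 4.5
```

Here W rates items in the opposite way to U (negative correlation), so only V ends up in D. Mean-centering compensates for users who rate systematically high or low.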

In item-based CF, you build an item-item matrix that captures the relationships between pairs of items, then use this matrix together with the current user’s ratings to infer the user’s taste.
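A minimal item-based sketch, assuming adjusted cosine similarity (each rating centered on its user's mean) for the item-item matrix and a similarity-weighted, mean-centered prediction. The ratings are hypothetical. The matrix is computed once for all item pairs and then reused for any user.

```python
import math
from itertools import combinations
from typing import Dict, Tuple

# Hypothetical toy ratings (1-5 scale); u4 has not rated item "B".
ratings: Dict[str, Dict[str, float]] = {
    "u1": {"A": 5, "B": 4, "C": 1},
    "u2": {"A": 4, "B": 5, "C": 2},
    "u3": {"A": 1, "B": 2, "C": 5},
    "u4": {"A": 5, "C": 1},
}
user_mean = {u: sum(r.values()) / len(r) for u, r in ratings.items()}
items = sorted({i for r in ratings.values() for i in r})


def adjusted_cosine(i: str, j: str) -> float:
    """Similarity of items i and j over users who rated both, ratings centered on each user's mean."""
    users = [u for u, r in ratings.items() if i in r and j in r]
    di = [ratings[u][i] - user_mean[u] for u in users]
    dj = [ratings[u][j] - user_mean[u] for u in users]
    den = math.sqrt(sum(x * x for x in di)) * math.sqrt(sum(y * y for y in dj))
    return sum(x * y for x, y in zip(di, dj)) / den if den else 0.0


# Item-item similarity matrix (symmetric), computed once.
sim: Dict[Tuple[str, str], float] = {}
for i, j in combinations(items, 2):
    sim[(i, j)] = sim[(j, i)] = adjusted_cosine(i, j)


def predict(user: str, target: str) -> float:
    """Predict the user's rating for target from the other items the user already rated."""
    rated = {i: r for i, r in ratings[user].items() if i != target}
    num = sum(sim[(target, i)] * (r - user_mean[user]) for i, r in rated.items())
    den = sum(abs(sim[(target, i)]) for i in rated)
    return user_mean[user] + num / den if den else user_mean[user]


print(round(predict("u4", "B"), 2))  # 5.0
```

Item B behaves like A and opposite to C across users, and u4 liked A and disliked C, so both signals push the prediction for B up.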

Hybrid models combine both techniques and can generally be divided into two approaches:

- Implementing two separate recommenders and combining their predictions

- Adding content-based methods to CF:

  - Item profiles to deal with the new-item problem

  - Demographics to deal with the new-user problem
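A minimal sketch of the first hybrid approach (two separate recommenders whose predictions are combined). It assumes min-max normalization so that the scores are comparable and a fixed blending weight `alpha = 0.7`, which is an arbitrary, hypothetical value that would normally be tuned. An item scored by only one recommender, such as a new item with no ratings, falls back to that single score, which loosely echoes the new-item idea.

```python
from typing import Dict, List


def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale scores to [0, 1] so that the two recommenders' outputs are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    span = hi - lo
    return {item: (s - lo) / span if span else 1.0 for item, s in scores.items()}


def hybrid(cf: Dict[str, float], content: Dict[str, float], alpha: float = 0.7, k: int = 3) -> List[str]:
    """Weighted blend of a CF recommender and a content-based recommender."""
    cf_n, cb_n = min_max(cf), min_max(content)
    combined: Dict[str, float] = {}
    for item in cf_n.keys() | cb_n.keys():
        if item in cf_n and item in cb_n:
            combined[item] = alpha * cf_n[item] + (1 - alpha) * cb_n[item]
        else:
            # Only one recommender can score this item, e.g. a new item with no ratings yet.
            combined[item] = cf_n.get(item, cb_n.get(item, 0.0))
    return sorted(combined, key=lambda item: (-combined[item], item))[:k]


# Hypothetical outputs of the two separate recommenders for one user.
cf_scores = {"A": 4.5, "B": 3.0, "C": 2.0}  # predicted ratings
content_scores = {"A": 0.2, "B": 0.9, "C": 0.4, "D": 0.8}  # similarity to the profile; "D" is new
print(hybrid(cf_scores, content_scores))  # ['D', 'A', 'B']
```

Without the content-based half, the new item D could never be recommended, because CF has no ratings for it.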

The main goal of data mining for RS is to extract information from a data set and transform it into an understandable structure for further use. This transformation includes database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization and on-line updating.

Data Mining Approaches in Recommender Systems

Knowledge discovery in data for a recommender system consists of the following steps:

- Selection

- Pre-processing

- Transformation

- Data mining

- Interpretation/evaluation

The key machine learning and data mining algorithms used in building recommender systems are:

- Association rules mining. A data mining technique to find relations between variables, initially used for shopping behavior analysis.

Associations are represented as rules: Antecedent → Consequent (if a customer buys A, that customer also buys B). An association between A and B means that the presence of A in a record implies, with a certain confidence, the presence of B in the same record. For example, the rule {Beer, chips} → Bacon states that a customer who buys beer and chips also buys bacon.

The most frequently used association rule mining algorithms are Apriori and FP-Growth.

- Slope one algorithm. An algorithm based on the ratings users gave to a set of items, aimed at predicting the rating of a “blank” (unrated) item, that is, forecasting how the current user would have rated it.
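A minimal sketch of the weighted Slope One variant: for every pair of items, compute the average rating difference over users who rated both, then let each item the current user rated "vote" for the blank item with its rating plus that difference, weighted by how many users support the difference. The ratings and names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Optional, Tuple

Ratings = Dict[str, Dict[str, float]]


def deviations(ratings: Ratings) -> Tuple[Dict[str, Dict[str, float]], Dict[str, Dict[str, int]]]:
    """dev[j][i]: average of (r_j - r_i) over users who rated both j and i; count[j][i]: number of such users."""
    dev: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    count: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for user_ratings in ratings.values():
        for j, rj in user_ratings.items():
            for i, ri in user_ratings.items():
                if i != j:
                    dev[j][i] += rj - ri
                    count[j][i] += 1
    for j in dev:
        for i in dev[j]:
            dev[j][i] /= count[j][i]
    return dev, count


def predict(ratings: Ratings, user: str, target: str) -> Optional[float]:
    """Weighted Slope One: each rated item i votes r_i + dev[target][i], weighted by count[target][i]."""
    dev, count = deviations(ratings)
    num = den = 0.0
    for i, ri in ratings[user].items():
        c = count[target][i]
        if i != target and c > 0:
            num += (dev[target][i] + ri) * c
            den += c
    return num / den if den else None


# Hypothetical toy ratings: Lucy has not rated item "A".
ratings: Ratings = {
    "John": {"A": 5, "B": 3, "C": 2},
    "Mark": {"A": 3, "B": 4},
    "Lucy": {"B": 2, "C": 5},
}
print(round(predict(ratings, "Lucy", "A"), 2))  # 4.33
```

On average, A is rated 0.5 above B (two users) and 3 above C (one user), so the prediction for Lucy is ((2 + 0.5) * 2 + (5 + 3) * 1) / 3.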

- Decision tree algorithm. A decision tree is a collection of nodes arranged as a tree (often a binary tree) and constructed top-down. The leaves of the tree correspond to classes (decisions such as likes or dislikes, or buys or does not buy). Each interior node is a condition on a feature, and its branches correspond to the feature’s values.

To classify a new instance, we start at the root, check the feature tested at each node, and follow the branch corresponding to its observed value. When we reach a leaf, the process terminates and the class at that leaf is assigned.

The most frequently used decision tree algorithms are CART and C4.5.
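The classification procedure can be sketched as follows. The tree is hand-built and hypothetical (learning it from data with an algorithm such as CART or C4.5 is not shown), and every interior node uses a simple binary "feature equals value" test.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    label: str  # the class (decision) assigned at this leaf, e.g. "likes" or "dislikes"


@dataclass
class Node:
    feature: str  # feature tested at this interior node
    value: str  # the test is "instance[feature] == value"
    if_true: "Tree"  # branch followed when the test holds
    if_false: "Tree"  # branch followed otherwise


Tree = Union[Leaf, Node]


def classify(tree: Tree, instance: Dict[str, str]) -> str:
    """Start at the root and follow the branch matching each observed value until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.if_true if instance.get(tree.feature) == tree.value else tree.if_false
    return tree.label


# Hypothetical hand-built tree (not learned from data): will a user like a movie?
movie_tree = Node(
    "genre", "comedy",
    if_true=Node("length", "short", if_true=Leaf("likes"), if_false=Leaf("dislikes")),
    if_false=Node("seen_director_before", "yes", if_true=Leaf("likes"), if_false=Leaf("dislikes")),
)

print(classify(movie_tree, {"genre": "comedy", "length": "short"}))  # likes
```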

Step-by-step Workflow of Using the Association Rules Technique

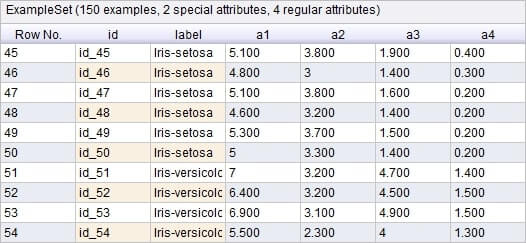

The dataset chosen for this research is the classic Iris data mining dataset. It contains 150 items distributed across 3 labeled classes (50 items each), and each item has 4 attributes. Our goal is to find association rules among the items in the dataset.

Next, we link each attribute value to a range. Suppose each attribute is split into 5 ranges: since a value lies in exactly one of them, four ranges are “false” and one is “true”. This approach applies to many practical situations, e.g., an item with 4 attributes and 5 options for each of them.

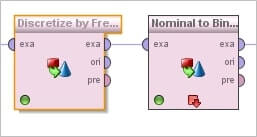

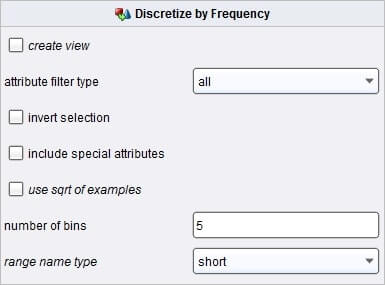

Here we use the Discretize by Frequency operator (with the number of bins set to 5), followed by the Nominal to Binominal operator.

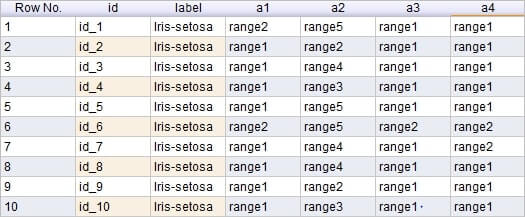

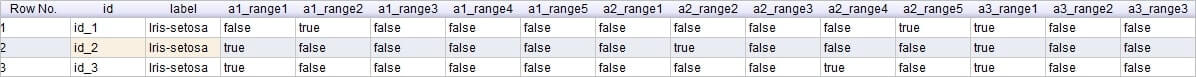

This is the result after discretization: we receive ranges for each attribute.

Then we get a binominal representation of the attributes: since we chose 5 bins, for each item 4 of them are false and one is true.
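The two preprocessing steps can be approximated in plain Python as below. This is a hypothetical sketch and does not reproduce the exact behavior or attribute naming of the Discretize by Frequency and Nominal to Binominal operators used above: equal-frequency bins are assigned by rank (so tied values may land in different bins), and the `<attribute>_range<k>` column names are invented for illustration. The values are made up and are not the actual Iris data.

```python
from typing import Dict, List


def discretize_by_frequency(values: List[float], n_bins: int = 5) -> List[int]:
    """Assign each value a bin index 0..n_bins-1 so that bins hold (roughly) equal numbers of values.

    Bins are assigned by rank, so tied values may land in different bins in this simple sketch.
    """
    order = sorted(range(len(values)), key=lambda i: values[i])
    bins = [0] * len(values)
    for rank, i in enumerate(order):
        bins[i] = rank * n_bins // len(values)
    return bins


def to_binominal(name: str, bins: List[int], n_bins: int = 5) -> List[Dict[str, bool]]:
    """One true/false column per range: exactly one is True for each item, the other n_bins - 1 are False."""
    return [{f"{name}_range{b + 1}": (b == v) for b in range(n_bins)} for v in bins]


# Hypothetical sepal-length-like values for 10 items (not the actual Iris data).
sepal_length = [5.1, 4.9, 6.3, 5.8, 7.1, 6.5, 5.0, 6.7, 5.5, 6.0]
bins = discretize_by_frequency(sepal_length)
rows = to_binominal("sepal_length", bins)
print(bins)  # [1, 0, 3, 2, 4, 3, 0, 4, 1, 2]
print(rows[0])
```

With 10 values and 5 bins, each bin receives exactly 2 items, and each binominal row has one `True` column and four `False` columns.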

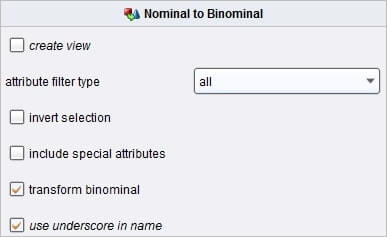

The “transform binominal” option is selected as the basic setting. With “use underscore” enabled, all attributes of the unprocessed data are kept in full.

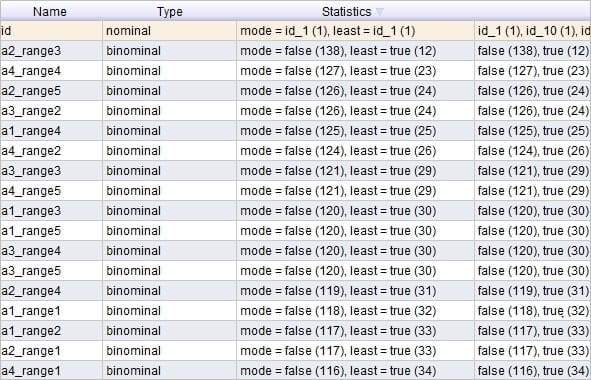

The overall metadata view is shown in descending order for the “true” and “false” ranges.

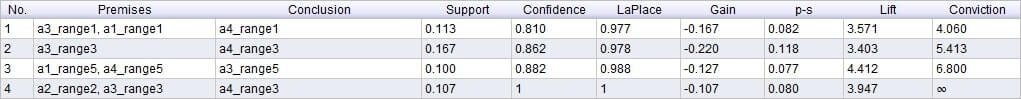

By applying the FP-Growth operator, we get frequent itemsets with 2 and 3 items, respectively. The support value shows the proportion of items in the dataset that contain a given itemset.

As a result, we receive a list of association rules, each showing the consequent for a premise of 2 antecedent items. The minimum confidence threshold is 0.8.
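The rule-generation step can be sketched on a tiny hypothetical binominal dataset, where each transaction lists the attributes that are "true" for one item. For simplicity, this sketch swaps FP-Growth for brute-force enumeration of 3-itemsets. FP-Growth finds the same frequent itemsets far more efficiently, but enumeration is easier to read on toy data. The minimum support of 0.3 is an assumed value. Each frequent 3-itemset is split into a 2-item premise and a 1-item conclusion, and the rule is kept if its confidence reaches the threshold.

```python
from itertools import combinations
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str, float, float]  # (premise, conclusion, support, confidence)


def support(itemset: Set[str], transactions: List[Set[str]]) -> float:
    """Fraction of transactions containing every item of itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)


def mine_rules(transactions: List[Set[str]], min_support: float = 0.3,
               min_confidence: float = 0.8) -> List[Rule]:
    """Rules with a 2-item premise and a 1-item conclusion, found by brute-force enumeration."""
    items = sorted(set().union(*transactions))
    rules: List[Rule] = []
    for triple in combinations(items, 3):
        s = support(set(triple), transactions)
        if s < min_support:  # keep only frequent 3-itemsets
            continue
        for conclusion in triple:
            premise = frozenset(triple) - {conclusion}
            # support(premise) >= s > 0, since every transaction containing the triple contains the premise.
            confidence = s / support(set(premise), transactions)
            if confidence >= min_confidence:
                rules.append((premise, conclusion, s, confidence))
    return rules


# Hypothetical binominal data: each transaction lists the attributes that are "true" for one item.
transactions = [
    {"a", "b", "c"},
    {"a", "b", "c"},
    {"a", "b", "c", "d"},
    {"a", "c", "d"},
    {"b", "d"},
    {"c", "d"},
]
for premise, conclusion, s, conf in mine_rules(transactions):
    print(sorted(premise), "->", conclusion, f"support={s:.2f}", f"confidence={conf:.2f}")
print(len(mine_rules(transactions, min_confidence=0.6)))  # 5
```

On this toy data, a threshold of 0.8 keeps 3 rules and lowering it to 0.6 keeps 5, the same trade-off between the number of rules and their reliability described in this walkthrough.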

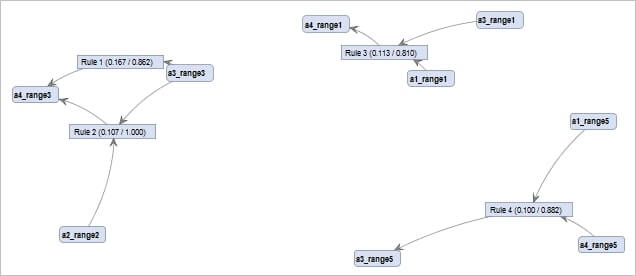

Here is the graphical representation of the obtained results:

Key parameters defined:

Premise. An antecedent set of items.

Conclusion. A consequent item.

Support. The support of an item set is defined as the proportion of transactions in the data set that contain the item set.

Confidence. An estimate of P(Y | X), the probability of observing Y given X.

Lift. The ratio P(Y | X) / P(Y), i.e., the rule’s confidence divided by the support of Y. A lift of 1 means that X and Y are independent; a lift above 1 means that X makes Y more likely.
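The three measures can be computed directly from their definitions. The sketch below uses a handful of hypothetical shopping baskets and the {Beer, chips} → Bacon rule from earlier in the article as the example.

```python
from typing import List, Set


def support(itemset: Set[str], transactions: List[Set[str]]) -> float:
    """Fraction of transactions that contain every item in itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(premise: Set[str], conclusion: Set[str], transactions: List[Set[str]]) -> float:
    """Estimate of P(conclusion | premise)."""
    return support(premise | conclusion, transactions) / support(premise, transactions)


def lift(premise: Set[str], conclusion: Set[str], transactions: List[Set[str]]) -> float:
    """P(conclusion | premise) / P(conclusion); 1.0 means premise and conclusion are independent."""
    return confidence(premise, conclusion, transactions) / support(conclusion, transactions)


# Hypothetical shopping baskets.
baskets = [
    {"beer", "chips", "bacon"},
    {"beer", "chips", "bacon", "eggs"},
    {"beer", "chips"},
    {"bacon", "eggs"},
    {"milk", "eggs"},
]
X, Y = {"beer", "chips"}, {"bacon"}
print(round(support(X | Y, baskets), 3), round(confidence(X, Y, baskets), 3), round(lift(X, Y, baskets), 3))
# 0.4 0.667 1.111
```

A lift slightly above 1 means that, in these baskets, buying beer and chips makes buying bacon somewhat more likely than its baseline rate of 0.6.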

As the results show, there are 4 rules we can rely on, thanks to the high confidence threshold. If we need more rules and can accept lower reliability, we can lower the threshold: with a threshold of 0.6, we get 21 rules. In practice, the recommended confidence threshold usually lies between 0.75 and 0.9, depending on the case at hand.

Considering the rapid rise in recommender systems popularity, we wouldn't be surprised to find that your visit to this article is a result of a recommender system’s work.

Would you like to leverage the potential of recommender systems for your business? Feel free to contact our data science team for a consultation.
